A simple random sample is a subset of a statistical population in which each member of the subset has an equal probability of being chosen.

A simple random sample is meant to be an unbiased representation of a group. Researchers can create a simple random sample using a couple of methods.

With a lottery method, each member of the population is assigned a number, after which numbers are selected at random.

An example of a simple random sample would be the names of 25 employees being chosen out of a hat from among a company's employees. In this case, the population is all employees, and the sample is random because each employee has an equal chance of being chosen. Random sampling is used in science to conduct randomized control tests or blinded experiments.

The example in which the names of 25 employees are chosen out of a hat is an example of the lottery method at work. Each of the employees would be assigned a number between 1 and the total number of employees, after which 25 of those numbers would be chosen at random.

Because individuals who make up the subset of the larger group are chosen at random, each individual in the larger population set has the same probability of being selected.

This creates, in most cases, a balanced subset that carries the greatest potential for representing the larger group as a whole. For larger populations, a manual lottery method can be quite onerous. Selecting a random sample from a large population usually requires a computer-generated process, in which the same methodology as the lottery method is used, only the number assignments and subsequent selections are performed by computers, not humans.
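The computer-generated version of the lottery method can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed procedure: the function name `lottery_draw` and the company size of 250 are assumptions made for the example, and only the sample size of 25 comes from the text.

```python
import random


def lottery_draw(population_size, sample_size, seed=None):
    """Draw `sample_size` distinct numbers from 1..population_size.

    random.Random.sample picks without replacement, so every number
    has the same chance of ending up in the draw.
    """
    rng = random.Random(seed)  # a fixed seed makes the draw repeatable
    return rng.sample(range(1, population_size + 1), sample_size)


# Hypothetical company size; the article only fixes the sample of 25.
drawn = lottery_draw(population_size=250, sample_size=25, seed=42)
print(sorted(drawn))
```

Passing a seed is optional. It is useful when the draw has to be audited or reproduced later, and it should be left as `None` when a fresh draw is wanted each time.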

With a simple random sample, there has to be room for error, represented by a plus and minus variance (sampling error).

For example, if a survey were taken in a school to determine how many students are left-handed, random sampling might find that eight of the sampled students are left-handed. That figure is an estimate of the share in the whole school, not an exact count.

The same is true regardless of the subject matter. A survey on the percentage of the student population that has green eyes or has a physical disability would result in a probability estimate based on a simple random survey, but always with a plus or minus variance.
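The plus or minus variance can be made concrete with the standard normal-approximation margin of error for a proportion. The sketch below is illustrative only: the sample of 100 students is a hypothetical figure (the text gives only the count of eight), and `z=1.96` assumes a 95% confidence level.

```python
import math


def margin_of_error(p_hat, n, z=1.96):
    """Normal-approximation margin of error for a sample proportion.

    p_hat: observed proportion in the sample
    n:     number of sampled units
    z:     critical value (1.96 corresponds to roughly 95% confidence)
    """
    return z * math.sqrt(p_hat * (1 - p_hat) / n)


sample_n = 100       # hypothetical sample size, not stated in the text
left_handed = 8      # count from the example
p_hat = left_handed / sample_n
moe = margin_of_error(p_hat, sample_n)
print(f"estimate: {p_hat:.1%} plus or minus {moe:.1%}")
```

Under these assumptions the margin comes out at roughly five percentage points. The normal approximation is crude when the count of interest is this small, so the result is best read as an order-of-magnitude guide.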

Although simple random sampling is intended to be an unbiased approach to surveying, sample selection bias can occur. When a sample set of the larger population is not inclusive enough, representation of the full population is skewed and requires additional sampling techniques.

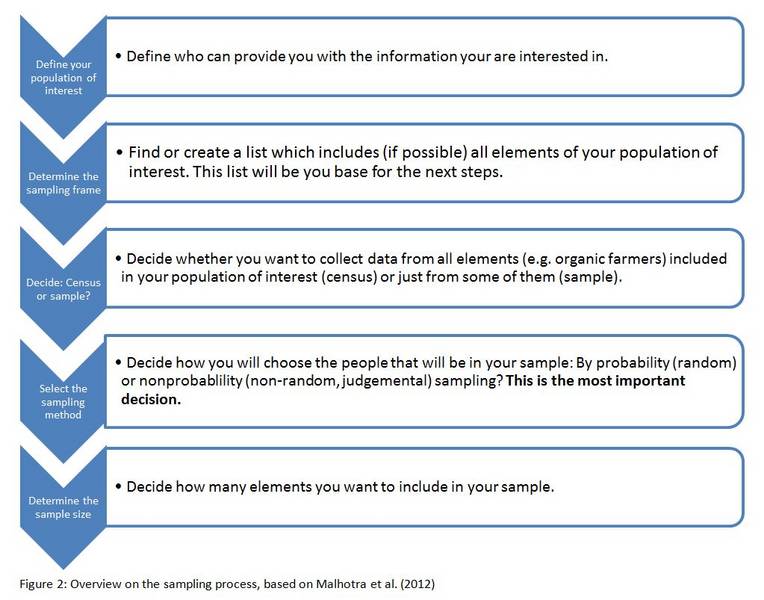

The simple random sampling process entails six steps. Each step must be performed in sequential order. The first step of the statistical analysis is to determine the population base.

This is the group about which you wish to learn more, confirm a hypothesis, or determine a statistical outcome.

This step is simply to identify what that population base is and to ensure that the group will adequately cover the outcome you are trying to solve for. Example: I wish to learn how the stocks of companies in the United States have performed over the past 20 years.

In the second step, before picking the units within a population, we need to determine how many units to select. This sample size may be constrained by the amount of time, capital rationing, or other resources available to analyze the sample.

However, be mindful to pick a sample size large enough to be truly representative of the population.

The third step is to identify the units of the population. In our example, the items within the population are easy to determine as they've already been identified for us.

However, imagine analyzing the students currently enrolled at a university or the food products being sold at a grocery store. This step entails crafting the entire list of all items within your population.

In the fourth step, the simple random sample process calls for every unit within the population to receive an unrelated numerical value. This is often assigned based on how the data may be filtered. For example, I could assign sequential numbers, starting at 1, to the companies based on market cap, alphabetical order, or company formation date.

How the values are assigned doesn't entirely matter; all that matters is that each value is sequential and each value has an equal chance of being selected. In step 2, we selected the number of items we wanted to analyze within our population.

For the running example, we choose to analyze 20 items. In the fifth step, we randomly select 20 of the values assigned to our units. In the running example, these are drawn from the full range of assigned numbers. There are multiple ways to randomly select these 20 numbers.

Example: Using a random number table, I select the numbers 2, 7, 17, 67, 68, 75, 77, 87, 92, and others, for 20 values in total.

The last step of a simple random sample is the bridge between step 4 and step 5. Each of the random values selected in the prior step corresponds to an item within our population. The sample is selected by identifying which random values were chosen and which population items those values match.

Example: My sample consists of the 2nd item in the list of companies alphabetically listed by CEO's last name. My sample also consists of company numbers 7, 17, 67, etc.

There is no single method for determining the random values to be selected (i.e., step 5 above). The analyst cannot simply choose numbers personally, as numbers picked by a person may not be random.

For example, the analyst's wedding anniversary may be the 24th, so they may consciously or subconsciously pick the value 24. Instead, the analyst should use a method that removes personal choice, such as the lottery method or a random number table.
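Steps 3 through 6 can be sketched together in Python, with a pseudo-random generator standing in for the random number table so that no personal choice enters the selection. This is an illustration under stated assumptions: the list of 500 placeholder company names and the helper `simple_random_sample` are hypothetical, and only the sample size of 20 comes from the running example.

```python
import random


def simple_random_sample(items, sample_size, seed=None):
    """Number the listed items, draw random numbers, map them back."""
    # Step 4: assign sequential numbers 1..N to the listed units.
    numbered = {number: item for number, item in enumerate(items, start=1)}

    # Step 5: randomly select `sample_size` of the assigned numbers.
    rng = random.Random(seed)
    chosen_numbers = sorted(rng.sample(sorted(numbered), sample_size))

    # Step 6: match each chosen number to its population item.
    return [(number, numbered[number]) for number in chosen_numbers]


# Step 3 (hypothetical data): the full list of units in the population.
companies = [f"Company {i:03d}" for i in range(1, 501)]

sample = simple_random_sample(companies, sample_size=20, seed=7)
for number, name in sample:
    print(number, name)
```

The ordering used to number the list (market cap, alphabetical, formation date) does not affect the result, since every number is equally likely to be drawn.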

When pulling together a sample, consider getting assistance from a colleague or independent person. They may be able to identify biases or discrepancies you may not be aware of. A simple random sample is used to represent the entire data population.

A stratified random sample divides the population into smaller groups, or strata, based on shared characteristics.

Unlike simple random samples, stratified random samples are used with populations that can be easily broken into different subgroups or subsets. These groups are based on certain criteria, then elements from each are randomly chosen in proportion to the group's size versus the population.

This method of sampling means there will be selections from each different group—the size of which is based on its proportion to the entire population.

Researchers must ensure the strata do not overlap. Each point in the population must only belong to one stratum so each point is mutually exclusive.
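Proportional stratified sampling can be sketched as follows. The function `stratified_sample`, the three strata labels, and their sizes are all hypothetical; the sketch assumes each unit maps to exactly one stratum, which is the non-overlap requirement stated above.

```python
import random
from collections import defaultdict


def stratified_sample(units, stratum_of, sample_size, seed=None):
    """Sample each stratum in proportion to its share of the population."""
    rng = random.Random(seed)

    # Group units by stratum; a unit lands in exactly one group.
    strata = defaultdict(list)
    for unit in units:
        strata[stratum_of(unit)].append(unit)

    total = len(units)
    sample = []
    for members in strata.values():
        # Proportional allocation; rounding can shift the total slightly.
        k = round(sample_size * len(members) / total)
        sample.extend(rng.sample(members, min(k, len(members))))
    return sample


# Hypothetical population: 600 units in stratum A, 300 in B, 100 in C.
units = ([("A", i) for i in range(600)]
         + [("B", i) for i in range(300)]
         + [("C", i) for i in range(100)])

sample = stratified_sample(units, stratum_of=lambda u: u[0], sample_size=50, seed=3)
```

With these sizes the allocation works out to 30, 15, and 5 units. When the proportions do not divide evenly, the rounded allocations may not sum exactly to the requested sample size, and a real study would need a rule for the remainder.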

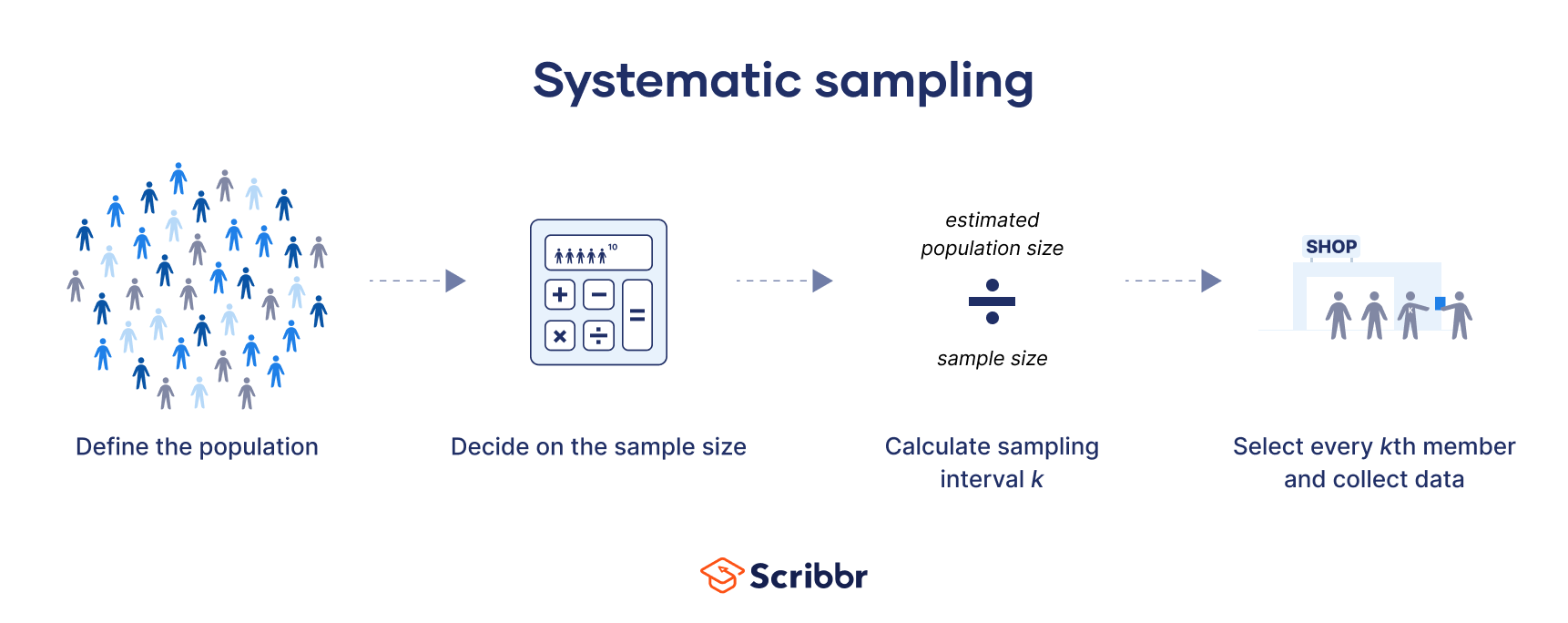

Overlapping strata would increase the likelihood that some data are included, thus skewing the sample. Systematic sampling entails selecting a single random variable, and that variable determines the interval at which the population items are selected.

For example, if the number 37 was chosen, the 37th company on the list sorted by CEO last name would be selected for the sample. Then, the 74th (i.e., the next 37th) and the 111th (i.e., the next 37th after that) would be added as well. Simple random sampling does not have a starting point; therefore, there is the risk that the population items selected at random may cluster.

In our example, there may be an abundance of CEOs whose last names start with the letter 'F'. Systematic sampling strives to even further reduce bias to ensure these clusters do not happen.
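The every-37th-company rule can be sketched in Python. The list of 500 placeholder companies and the function name `systematic_sample` are assumptions for illustration; the interval of 37 is the one used in the example.

```python
def systematic_sample(items, interval):
    """Select every `interval`-th item, starting at position `interval`.

    Positions are 1-based to match the numbering in the text.
    """
    return [items[pos - 1] for pos in range(interval, len(items) + 1, interval)]


# Hypothetical sorted list of companies.
companies = [f"Company {i}" for i in range(1, 501)]

picked = systematic_sample(companies, interval=37)
print(picked[:3])
```

In practice the interval itself would be drawn at random, for instance with `random.randint`, before the list is walked.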

Cluster sampling can occur as a one-stage cluster or two-stage cluster. In a one-stage cluster, items within a population are put into comparable groupings; using our example, companies are grouped by year formed.

Then, sampling occurs within these clusters. Two-stage cluster sampling occurs when clusters are formed through random selection.

The population is not clustered with other similar items. Then, sample items are randomly selected within each cluster.

Simple random sampling does not cluster any population sets. Though simple random sampling may be simpler, clustering (especially two-stage clustering) may enhance the randomness of sample items.
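A two-stage cluster sample can be sketched as follows. The sketch follows one common reading of the technique, which is an assumption here: clusters are first chosen at random, and units are then chosen at random inside each chosen cluster. The company records, the `year_formed` field, and the function name are hypothetical.

```python
import random
from collections import defaultdict


def two_stage_cluster_sample(units, cluster_of, n_clusters, per_cluster, seed=None):
    """Stage 1: pick clusters at random. Stage 2: pick units within them."""
    rng = random.Random(seed)

    # Group the population into clusters (here: by year formed).
    clusters = defaultdict(list)
    for unit in units:
        clusters[cluster_of(unit)].append(unit)

    # Stage 1: random selection of whole clusters.
    chosen_keys = rng.sample(sorted(clusters), n_clusters)

    # Stage 2: random selection of units inside each chosen cluster.
    sample = []
    for key in chosen_keys:
        members = clusters[key]
        sample.extend(rng.sample(members, min(per_cluster, len(members))))
    return sample


# Hypothetical population: 200 companies spread over 10 formation years.
companies = [{"name": f"Company {i}", "year_formed": 1990 + i % 10} for i in range(200)]

sample = two_stage_cluster_sample(
    companies, cluster_of=lambda c: c["year_formed"],
    n_clusters=3, per_cluster=5, seed=1,
)
```

A one-stage variant would keep every unit of each chosen cluster instead of sampling within it.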

In addition, cluster sampling may provide a deeper analysis on a specific snapshot of a population which may or may not enhance the analysis. While simple random samples are easy to use, they do come with key disadvantages that can render the data useless. Ease of use represents the biggest advantage of simple random sampling.

Unlike more complicated sampling methods, such as stratified random sampling and cluster sampling, no need exists to divide the population into sub-populations or take any other additional steps before selecting members of the population at random.

It is considered a fair way to select a sample from a larger population since every member of the population has an equal chance of getting selected. Therefore, simple random sampling is known for its randomness and less chance of sampling bias.

A sampling error can occur with a simple random sample if the sample does not end up accurately reflecting the population it is supposed to represent. For example, in our simple random sample of 25 employees, it would be possible to draw 25 men even if the population consisted of women, men, and nonbinary people.
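How likely such a lopsided draw is can be worked out with a hypergeometric calculation. The population figures below (250 employees, 125 of them men) are hypothetical, since the text gives only the sample size of 25; the point of the sketch is that the probability is tiny but not zero.

```python
from math import comb


def prob_all_from_group(group_size, population_size, sample_size):
    """Probability that a simple random sample is drawn entirely from one group.

    Counts the samples made up only of group members and divides by the
    number of all possible samples of that size.
    """
    if sample_size > group_size:
        return 0.0
    return comb(group_size, sample_size) / comb(population_size, sample_size)


# Hypothetical composition: 125 men in a company of 250 employees.
p = prob_all_from_group(group_size=125, population_size=250, sample_size=25)
print(f"chance of drawing 25 men: {p:.3e}")
```

Less extreme imbalances, such as a sample with noticeably too many or too few members of one group, are far more probable, which is why sampling error is a practical concern rather than a theoretical one.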

For this reason, simple random sampling is more commonly used when the researcher knows little about the population. If the researcher knew more, it would be better to use a different sampling technique, such as stratified random sampling, which helps to account for the differences within the population, such as age, race, or gender.

Other disadvantages include the fact that for sampling from large populations, the process can be time-consuming and costly compared to other methods. Researchers may find a certain project not worth the endeavor if its cost-benefit analysis does not generate positive results.

As every unit has to be assigned an identifying or sequential number prior to the selection process, this task may be difficult based on the method of data collection or size of the data set. No easier method exists to extract a research sample from a larger population than simple random sampling.

Selecting enough subjects completely at random from the larger population also yields a sample that can be representative of the group being studied. Among the disadvantages of this technique are difficulty gaining access to respondents that can be drawn from the larger population, greater time, greater costs, and the fact that bias can still occur under certain circumstances.

A stratified random sample, in contrast to a simple draw, first divides the population into smaller groups, or strata, based on shared characteristics. Therefore, a stratified sampling strategy will ensure that members from each subgroup are included in the data analysis.

Stratified sampling is used to highlight differences between groups in a population, as opposed to simple random sampling, which treats all members of a population as equal, with an equal likelihood of being sampled.

Using simple random sampling allows researchers to make generalizations about a specific population and leave out any bias. Using statistical techniques, inferences and predictions can be made about the population without having to survey or collect data from every individual in that population.

When analyzing a population, simple random sampling is a technique that gives every item within the population the same probability of being selected for the sample. This more basic form of sampling can be expanded upon to derive more complicated sampling methods.

Sample size is the beating heart of any research project. It determines whether you see a broad view or a focus on minute details, and determining it correctly involves a careful balancing act. Finding an appropriate sample size demands a clear understanding of the level of detail you wish to see in your data and the constraints you might encounter along the way.

Finding the right sample size requires first answering two other questions: how much statistical significance matters to you, and what real-world constraints apply to your research.

At the heart of the first question is the goal of confidently differentiating between groups by describing meaningful differences as statistically significant. First, consider when you deem a difference to be meaningful in your area of research. The exact same magnitude of difference can have very little meaning in one context and extraordinary meaning in another. You ultimately need to determine the level of precision that will help you make your decision.

Within sampling, the lowest amount of magnification, or smallest sample size, could make the most sense given the level of precision needed as well as timeline and budgetary constraints. You should also consider how much you expect your responses to vary. When nearly everybody gives the same answer, a small sample is enough; when responses vary a lot, larger sample sizes make sense.

Three terms are useful here:

- Statistical significance: the likelihood that the results of a study or experiment did not occur randomly or by chance, but are meaningful and indicate a genuine effect or relationship between variables.
- Magnitude of difference: the size or extent of the difference between two or more groups or variables, providing a measure of the effect size or practical significance of the results.
- Actionable insights: valuable findings or conclusions drawn from data analysis that can be directly applied in decision-making processes or strategies to achieve a particular goal or outcome.

There is no way to guarantee statistically significant differences at the outset of a study, and that is a good thing. Even with a sample size of a million, there simply may not be any differences, or at least none that could be described as statistically significant. And there are times when a lack of significance is positive. Imagine if your main competitor ran a multi-million dollar ad campaign in a major city and a huge pre-post study to detect campaign effects, only to discover that there were no statistically significant differences in brand awareness. This may be terrible news for your competitor, but it would be great news for you.

As you determine your sample size, you should consider the real-world constraints on your research. Timing, budget, and target population are among the most common constraints, affecting virtually every study. By understanding and acknowledging them, you can navigate the practical constraints of your research when pulling together your sample.

Gathering a larger sample naturally requires more time. This is particularly true for elusive audiences, those hard-to-reach groups that require special effort to engage. A particularly tight timeline could become an obstacle, causing you to rethink your sample size to meet your deadline.

Every sample, whether large or small, inexpensive or costly, consumes a portion of your budget. Samples are like an open market: some are inexpensive, others are pricey, but all have a price tag attached. These factors can limit your sample size even further.

A good sample size depends on the context and goals of the research. In general, a good sample size is one that accurately represents the population and allows for reliable statistical analysis. Larger sample sizes are typically better because they reduce the likelihood of sampling errors and provide a more accurate representation of the population. However, larger sample sizes often increase the impact of practical considerations like time, budget, and the availability of your audience. Ultimately, you should aim for a sample size that balances statistical validity and practical feasibility.

Choosing the right sample size is an intricate balancing act, but a few tips can take away a lot of the complexity. The foundation of your research is a clearly defined goal. If your aim is to get a broad overview of a topic, a larger, more diverse sample may be appropriate. However, if your goal is to explore a niche aspect of your subject, a smaller, more targeted sample might serve you better. You should always align your sample size with the objectives of your research.

When choosing a sample size, we must consider the following issues:

- What population parameters we want to estimate
- Cost of sampling (importance of information)
- How much is already known
- Spread (variability) of the population
- Practicality: how hard it is to collect data
- How precise we want the final estimates to be

The cost of sampling helps us determine how precise our estimates should be. When choosing sample sizes we need to select risk values. If the decisions we will make from the sampling activity are very valuable, then we will want low risk values and hence larger sample sizes.

If our process has been studied before, we can use that prior information to reduce sample sizes. This can be done by using prior mean and variance estimates and by stratifying the population to reduce variation within groups.

We take samples to form estimates of some characteristic of the population of interest. The variance of such an estimate grows with the variance of the population, which means that if the variability of the population is large, we must take many samples. Conversely, a small population variance means we don't have to take as many samples.

Of course, the sample size you select must make sense. This is where the trade-offs usually occur. We want to take enough observations to obtain reasonably precise estimates of the parameters of interest, but we also want to do this within a practical resource budget. The important thing is to quantify the risks associated with the chosen sample size.
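One common way to quantify the trade-off between precision and sample size, when the quantity being estimated is a proportion, is Cochran's formula with an optional finite population correction. The sketch below is a standard textbook calculation and not a method prescribed by the text; the default of `p=0.5` (the most variable case) and `z=1.96` (about 95% confidence) are assumptions.

```python
import math


def required_sample_size(margin_of_error, p=0.5, z=1.96, population_size=None):
    """Sample size needed to estimate a proportion within a given margin.

    margin_of_error: acceptable half-width of the interval (0.05 = 5 points)
    p:               expected proportion; 0.5 maximizes p * (1 - p)
    z:               critical value for the chosen confidence level
    population_size: if given, apply the finite population correction
    """
    # Cochran's formula for a large (effectively infinite) population.
    n0 = (z ** 2) * p * (1 - p) / margin_of_error ** 2

    # A small population needs fewer observations for the same precision.
    if population_size is not None:
        n0 = n0 / (1 + (n0 - 1) / population_size)

    return math.ceil(n0)


print(required_sample_size(0.05))                        # large population
print(required_sample_size(0.05, population_size=1000))  # population of 1,000
```

The term `p * (1 - p)` is the variance of a yes/no response, so the formula reflects the point made above: the more the population varies, the more observations are needed, and halving the margin of error roughly quadruples the required sample.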

Clusters might be pre-existing groups, such as people in certain zip codes or students belonging to an academic year. Cluster sampling can be done by selecting the entire cluster or, in the case of two-stage cluster sampling, by randomly selecting the cluster itself and then selecting at random again within the cluster.

Pros: Cluster sampling is economically beneficial and logistically easier when dealing with vast and geographically dispersed populations. Cons: Due to potential similarities within clusters, this method can introduce a greater sampling error compared to other methods.

Here are some forms of non-probability sampling and how they work.

Convenience sampling: People or elements in a sample are selected on the basis of their accessibility and availability. If you are doing a research survey and you work at a university, for example, a convenience sample might consist of students or co-workers who happen to be on campus with open schedules and who are willing to take your questionnaire. Pros: Convenience sampling is the most straightforward method, requiring minimal planning, which makes it quick to implement. Cons: Due to its non-random nature, the method is highly susceptible to biases, and the results often apply poorly to the real world.

Quota sampling: Like the probability-based stratified sampling method, this approach aims to achieve a spread across the target population by specifying who should be recruited for a survey according to certain groups or criteria. For example, your quota might include a certain number of males and a certain number of females. Alternatively, you might want your samples to be at a specific income level or in certain age brackets or ethnic groups.

Purposive sampling: Participants for the sample are chosen consciously by researchers based on their knowledge and understanding of the research question at hand or their goals. Also known as judgment sampling, this technique is unlikely to result in a representative sample, but it is a quick and fairly easy way to get a range of results or responses. Pros: Purposive sampling targets specific criteria or characteristics, making it ideal for studies that require specialized participants or specific conditions.

Snowball sampling: With this approach, people recruited to be part of a sample are asked to invite those they know to take part, who are then asked to invite their friends and family, and so on. The participation radiates through a community of connected individuals like a snowball rolling downhill. Pros: Especially useful for hard-to-reach or secretive populations, snowball sampling is effective for certain niche studies. Cons: The method can introduce bias due to the reliance on participant referrals, and the choice of initial seeds can significantly influence the final sample.

Choosing the right sampling method is a pivotal aspect of any research process, but it can be a stumbling block for many. If you aim to get a general sense of a larger group, simple random or stratified sampling could be your best bet. For focused insights or studying unique communities, snowball or purposive sampling might be more suitable. For a diverse group with different categories, stratified sampling can ensure all segments are covered.

Your available time, budget, and ease of accessing participants matter. Convenience or quota sampling can be practical for quicker studies, but they come with some trade-offs. If reaching everyone in your desired group is challenging, snowball or purposive sampling can be more feasible.

Decide if you want your findings to represent a much broader group. For a wider representation, methods that include everyone fairly, like probability sampling, are a good option. For specialized insights into specific groups, non-probability sampling methods can be more suitable.

Before fully committing, discuss your chosen method with others in your field and consider a test run.

Using a sample is a kind of short-cut. How much accuracy you lose depends on how well you control for sampling error, non-sampling error, and bias in your survey design.

Sampling definitions:

- Population: the total number of people or things you are interested in
- Sample: a smaller number within your population that will represent the whole
- Sampling: the process and method of selecting your sample

Sampling strategies in research vary widely across different disciplines and research areas, and from study to study. There are two major types of sampling methods: probability and non-probability sampling. Probability sampling, also known as random sampling, is a kind of sample selection where randomization is used instead of deliberate choice. Each member of the population has a known, non-zero chance of being selected. Non-probability sampling techniques are where the researcher deliberately picks items or individuals for the sample based on non-random factors such as convenience, geographic availability, or costs.
Simple random sampling With simple random sampling , every element in the population has an equal chance of being selected as part of the sample. Systematic sampling With systematic sampling the random selection only applies to the first item chosen. Stratified sampling Stratified sampling involves random selection within predefined groups. Cluster sampling With cluster sampling, groups rather than individual units of the target population are selected at random for the sample. Convenience sampling People or elements in a sample are selected on the basis of their accessibility and availability. A simple random sample is used to represent the entire data population. A stratified random sample divides the population into smaller groups, or strata, based on shared characteristics. Unlike simple random samples, stratified random samples are used with populations that can be easily broken into different subgroups or subsets. These groups are based on certain criteria, then elements from each are randomly chosen in proportion to the group's size versus the population. This method of sampling means there will be selections from each different group—the size of which is based on its proportion to the entire population. Researchers must ensure the strata do not overlap. Each point in the population must only belong to one stratum so each point is mutually exclusive. Overlapping strata would increase the likelihood that some data are included, thus skewing the sample. Systematic sampling entails selecting a single random variable, and that variable determines the internal in which the population items are selected. For example, if the number 37 was chosen, the 37th company on the list sorted by CEO last name would be selected by the sample. Then, the 74th i. the next 37th and the st i. the next 37th after that would be added as well. Simple random sampling does not have a starting point; therefore, there is the risk that the population items selected at random may cluster. 
In our example, there may be an abundance of CEOs with the last name that start with the letter 'F'. Systematic sampling strives to even further reduce bias to ensure these clusters do not happen. Cluster sampling can occur as a one-stage cluster or two-stage cluster. In a one-stage cluster, items within a population are put into comparable groupings; using our example, companies are grouped by year formed. Then, sampling occurs within these clusters. Two-stage cluster sampling occurs when clusters are formed through random selection. The population is not clustered with other similar items. Then, sample items are randomly selected within each cluster. Simple random sampling does not cluster any population sets. Though sample random sampling may be a simpler, clustering especially two-stage clustering may enhance the randomness of sample items. In addition, cluster sampling may provide a deeper analysis on a specific snapshot of a population which may or may not enhance the analysis. While simple random samples are easy to use, they do come with key disadvantages that can render the data useless. Ease of use represents the biggest advantage of simple random sampling. Unlike more complicated sampling methods, such as stratified random sampling and probability sampling, no need exists to divide the population into sub-populations or take any other additional steps before selecting members of the population at random. It is considered a fair way to select a sample from a larger population since every member of the population has an equal chance of getting selected. Therefore, simple random sampling is known for its randomness and less chance of sampling bias. A sampling error can occur with a simple random sample if the sample does not end up accurately reflecting the population it is supposed to represent. For example, in our simple random sample of 25 employees, it would be possible to draw 25 men even if the population consisted of women, men, and nonbinary people. 
For this reason, simple random sampling is more commonly used when the researcher knows little about the population. If the researcher knew more, it would be better to use a different sampling technique, such as stratified random sampling, which helps to account for the differences within the population, such as age, race, or gender. Other disadvantages include the fact that for sampling from large populations, the process can be time-consuming and costly compared to other methods. Researchers may find a certain project not worth the endeavor of its cost-benefit analysis does not generate positive results. As every unit has to be assigned an identifying or sequential number prior to the selection process, this task may be difficult based on the method of data collection or size of the data set. No easier method exists to extract a research sample from a larger population than simple random sampling. Selecting enough subjects completely at random from the larger population also yields a sample that can be representative of the group being studied. Among the disadvantages of this technique are difficulty gaining access to respondents that can be drawn from the larger population, greater time, greater costs, and the fact that bias can still occur under certain circumstances. A stratified random sample, in contrast to a simple draw, first divides the population into smaller groups, or strata, based on shared characteristics. Therefore, a stratified sampling strategy will ensure that members from each subgroup are included in the data analysis. Stratified sampling is used to highlight differences between groups in a population, as opposed to simple random sampling, which treats all members of a population as equal, with an equal likelihood of being sampled. Using simple random sampling allows researchers to make generalizations about a specific population and leave out any bias. 
Using statistical techniques, inferences and predictions can be made about the population without having to survey or collect data from every individual in that population. When analyzing a population, simple random sampling is a technique that results in every item within the population to have the same probability of being selected for the sample size. This more basic form of sampling can be expanded upon to derive more complicated sampling methods. However, the process of making a list of all items in a population, assigning each a sequential number, choosing the sample size, and randomly selecting items is a more basic form of selecting units for analysis. Use limited data to select advertising. Create profiles for personalised advertising. Use profiles to select personalised advertising. Create profiles to personalise content. Use profiles to select personalised content. Measure advertising performance. Measure content performance. Understand audiences through statistics or combinations of data from different sources. Develop and improve services. Use limited data to select content. List of Partners vendors. Table of Contents Expand. Table of Contents. What Is a Simple Random Sample? How It Works. Conducting a Simple Random Sample. Random Sampling Techniques. Simple Random vs. Other Methods. Pros and Cons. Simple Random Sample FAQs. The Bottom Line. Corporate Finance Financial Analysis. Key Takeaways A simple random sample takes a small, random portion of the entire population to represent the entire data set, where each member has an equal probability of being chosen. Researchers can create a simple random sample using methods like lotteries or random draws. Simple random samples are determined by assigning sequential values to each item within a population, then randomly selecting those values. Simple random sampling provides a different sampling approach compared to systematic sampling, stratified sampling, or cluster sampling. 
Simple Random Sampling

Advantages
- Each item within a population has an equal chance of being selected.
- There is less of a chance of sampling bias, as every item is randomly selected.
- This sampling method is easy and convenient for data sets already listed or digitally stored.

Disadvantages
- Incomplete population demographics may exclude certain groups from being sampled.
- Random selection means the sample may not be truly representative of the population.
- Depending on the data set size and format, random sampling may be a time-intensive process.

Sample Size Justification | Collabra: Psychology | University of California Press


If the decisions we will make from the sampling activity are very valuable, then we will want low risk values and hence larger sample sizes. If our process has been studied before, we can use that prior information to reduce sample sizes.

This can be done by using prior mean and variance estimates and by stratifying the population to reduce variation within groups. We take samples to form estimates of some characteristic of the population of interest. The variance of such an estimate grows with the variability of the population and shrinks as the sample size increases. This means that if the variability of the population is large, then we must take many samples.

Conversely, a small population variance means we don't have to take as many samples. Of course the sample size you select must make sense.

This is where the trade-offs usually occur. We want to take enough observations to obtain reasonably precise estimates of the parameters of interest but we also want to do this within a practical resource budget. The important thing is to quantify the risks associated with the chosen sample size.

In summary, the steps involved in estimating a sample size are as follows. First, there must be a statement about what is expected of the sample. We must determine what it is we are trying to estimate, how precise we want the estimate to be, and what we are going to do with the estimate once we have it.

This should be easy to derive from the goals. Second, we must find some equation that connects the desired precision of the estimate with the sample size.

This is a probability statement. Consult a statistician if the standard equations are not appropriate for your situation.

An important question to consider when justifying sample sizes is which effect sizes are deemed interesting, and the extent to which the data that is collected informs inferences about these effect sizes. Depending on the sample size justification chosen, researchers could consider (1) what the smallest effect size of interest is, (2) which minimal effect size will be statistically significant, (3) which effect sizes they expect (and what they base these expectations on), (4) which effect sizes would be rejected based on a confidence interval around the effect size, (5) which ranges of effects a study has sufficient power to detect based on a sensitivity power analysis, and (6) which effect sizes are expected in a specific research area.

Researchers can use the guidelines presented in this article, for example by using the interactive form in the accompanying online Shiny app, to improve their sample size justification, and hopefully, align the informational value of a study with their inferential goals.

Scientists perform empirical studies to collect data that helps to answer a research question. The more data that is collected, the more informative the study will be with respect to its inferential goals. A sample size justification should consider how informative the data will be given an inferential goal, such as estimating an effect size, or testing a hypothesis.

Even though a sample size justification is sometimes requested in manuscript submission guidelines, when submitting a grant to a funder, or submitting a proposal to an ethical review board, the number of observations is often simply stated, but not justified.

This makes it difficult to evaluate how informative a study will be. To prevent such concerns from emerging when it is too late e. Researchers often find it difficult to justify their sample size i.

In this review article six possible approaches are discussed that can be used to justify the sample size in a quantitative study (see Table 1). This is not an exhaustive overview, but it includes the most common and applicable approaches for single studies.

The first justification is that data from (almost) the entire population has been collected. The second justification centers on resource constraints, which are almost always present, but rarely explicitly evaluated. The third and fourth justifications are based on a desired statistical power or a desired accuracy. The fifth justification relies on heuristics, and finally, researchers can choose a sample size without any justification.

Each of these justifications can be stronger or weaker depending on which conclusions researchers want to draw from the data they plan to collect.

However, it is important to note that the value of the information that is collected depends on the extent to which the final sample size allows a researcher to achieve their inferential goals, and not on the sample size justification that is chosen. The extent to which these approaches make other researchers judge the data that is collected as informative depends on the details of the question a researcher aimed to answer and the parameters they chose when determining the sample size for their study.

For example, a badly performed a-priori power analysis can quickly lead to a study with very low informational value. These six justifications are not mutually exclusive, and multiple approaches can be considered when designing a study.

The informativeness of the data that is collected depends on the inferential goals a researcher has, or in some cases, the inferential goals scientific peers will have. A shared feature of the different inferential goals considered in this review article is the question which effect sizes a researcher considers meaningful to distinguish.

This implies that researchers need to evaluate which effect sizes they consider interesting. These evaluations rely on a combination of statistical properties and domain knowledge.

In Table 2 six possibly useful considerations are provided. This is not intended to be an exhaustive overview, but it presents common and useful approaches that can be applied in practice. Not all evaluations are equally relevant for all types of sample size justifications. The online Shiny app accompanying this manuscript provides researchers with an interactive form that guides researchers through the considerations for a sample size justification.

These considerations often rely on the same information, so these six considerations should be seen as a set of complementary approaches that can be used to evaluate which effect sizes are of interest. To start, researchers should consider what their smallest effect size of interest is.

Second, although only relevant when performing a hypothesis test, researchers should consider which effect sizes could be statistically significant given a choice of an alpha level and sample size. Third, it is important to consider the range of effect sizes that are expected.

This requires a careful consideration of the source of this expectation and the presence of possible biases in these expectations.

Fourth, it is useful to consider the width of the confidence interval around possible values of the effect size in the population, and whether we can expect this confidence interval to reject effects we considered a-priori plausible. Fifth, it is worth evaluating the power of the test across a wide range of possible effect sizes in a sensitivity power analysis.

Sixth, a researcher can consider the effect size distribution of related studies in the literature. Since all scientists are faced with resource limitations, they need to balance the cost of collecting each additional datapoint against the increase in information that datapoint provides.

This is referred to as the value of information (Eckermann et al.). Calculating the value of information is notoriously difficult (Detsky). Researchers need to specify the cost of collecting data, and weigh the costs of data collection against the increase in utility that having access to the data provides.

From a value of information perspective not every data point that can be collected is equally valuable (J. Halpern et al.). Whenever additional observations do not change inferences in a meaningful way, the costs of data collection can outweigh the benefits.

The value of additional information will in most cases be a non-monotonic function, especially when it depends on multiple inferential goals. A researcher might be interested in comparing an effect against a previously observed large effect in the literature, a theoretically predicted medium effect, and the smallest effect that would be practically relevant.

In such a situation the expected value of sampling information will lead to different optimal sample sizes for each inferential goal. It could be valuable to collect informative data about a large effect, with additional data having less or even a negative marginal utility, up to a point where the data becomes increasingly informative about a medium effect size, with the value of sampling additional information decreasing once more until the study becomes increasingly informative about the presence or absence of a smallest effect of interest.

Because of the difficulty of quantifying the value of information, scientists typically use less formal approaches to justify the amount of data they set out to collect in a study.

Even though the cost-benefit analysis is not always made explicit in reported sample size justifications, the value of information perspective is almost always implicitly the underlying framework that sample size justifications are based on.

Throughout the subsequent discussion of sample size justifications, the importance of considering the value of information given inferential goals will repeatedly be highlighted. In some instances it might be possible to collect data from almost the entire population under investigation.

For example, researchers might use census data, collect data from all employees at a firm, or study a small population of top athletes. Whenever it is possible to measure the entire population, the sample size justification becomes straightforward: the researcher used all the data that is available.

A common reason for the number of observations in a study is that resource constraints limit the amount of data that can be collected at a reasonable cost (Lenth). In practice, sample sizes are always limited by the resources that are available. Researchers practically always have resource limitations, and therefore even when resource constraints are not the primary justification for the sample size in a study, it is always a secondary justification.

Despite the omnipresence of resource limitations, the topic often receives little attention in texts on experimental design (for an example of an exception, see Bulus and Dong). This might make it feel like acknowledging resource constraints is not appropriate, but the opposite is true: because resource limitations always play a role, a responsible scientist carefully evaluates resource constraints when designing a study.

Resource constraint justifications are based on a trade-off between the costs of data collection, and the value of having access to the information the data provides. Even if researchers do not explicitly quantify this trade-off, it is revealed in their actions.

For example, researchers rarely spend all the resources they have on a single study. Given resource constraints, researchers are confronted with an optimization problem of how to spend resources across multiple research questions.

Time and money are two resource limitations all scientists face. A PhD student has a certain time to complete a PhD thesis, and is typically expected to complete multiple research lines in this time. In addition to time limitations, researchers have limited financial resources that often directly influence how much data can be collected.

A third limitation in some research lines is that there might simply be a very small number of individuals from whom data can be collected, such as when studying patients with a rare disease. A resource constraint justification puts limited resources at the center of the justification for the sample size that will be collected, and starts with the resources a scientist has available.

These resources are translated into an expected number of observations N that a researcher expects they will be able to collect with an amount of money in a given time.

The challenge is to evaluate whether collecting N observations is worthwhile. How do we decide if a study will be informative, and when should we conclude that data collection is not worthwhile? Having data always provides more knowledge about the research question than not having data, so in an absolute sense, all data that is collected has value.

However, it is possible that the benefits of collecting the data are outweighed by the costs of data collection. It is most straightforward to evaluate whether data collection has value when we know for certain that someone will make a decision, with or without data.

In such situations any additional data will reduce the error rates of a well-calibrated decision process, even if only ever so slightly.

For example, without data we will not perform better than a coin flip if we guess which of two conditions has a higher true mean score on a measure. With some data, we can perform better than a coin flip by picking the condition that has the highest mean.

With a small amount of data we would still very likely make a mistake, but the error rate is smaller than without any data. In these cases, the value of information might be positive, as long as the reduction in error rates is more beneficial than the cost of data collection.
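The coin-flip argument can be illustrated numerically. The sketch below assumes two independent groups with normally distributed scores, a common standard deviation, and a true standardized difference `d` between the conditions; under those assumptions the probability of picking the truly better condition by choosing the higher sample mean has a closed form. The function name and the example value d = 0.2 are illustrative choices, not taken from the text.

```python
from math import sqrt
from statistics import NormalDist


def prob_pick_better_condition(d, n_per_group):
    """Probability that the condition with the higher true mean also
    has the higher sample mean.

    Assumes normal data and equal SDs: the difference in sample means is
    normal with mean d and standard error sqrt(2 / n), in SD units.
    """
    if n_per_group == 0:
        return 0.5  # no data: the guess is a coin flip
    return NormalDist().cdf(d * sqrt(n_per_group / 2))


for n in (0, 5, 20, 100):
    p = prob_pick_better_condition(0.2, n)
    print(f"n per group = {n:3d}  P(correct pick) = {p:.3f}")
```

Even a handful of observations moves the probability above one half, but for a small true difference the chance of a wrong pick stays substantial until the sample is fairly large.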

This argument in favor of collecting a small dataset requires (1) that researchers share the data in a way that a future meta-analyst can find it, and (2) that there is a decent probability that someone will perform a high-quality meta-analysis that will include this data in the future S.

The uncertainty about whether there will ever be such a meta-analysis should be weighed against the costs of data collection.

One way to increase the probability of a future meta-analysis is if researchers commit to performing this meta-analysis themselves, by combining several studies they have performed into a small-scale meta-analysis (Cumming). For example, a researcher might plan to repeat a study for the next 12 years in a class they teach, with the expectation that after 12 years a meta-analysis of 12 studies would be sufficient to draw informative inferences (but see ter Schure and Grünwald). If it is not plausible that a researcher will collect all the required data by themselves, they can attempt to set up a collaboration where fellow researchers in their field commit to collecting similar data with identical measures.

If it is not likely that sufficient data will emerge over time to reach the inferential goals, there might be no value in collecting the data. Even if a researcher believes it is worth collecting data because a future meta-analysis will be performed, they will most likely perform a statistical test on the data.

To make sure their expectations about the results of such a test are well-calibrated, it is important to consider which effect sizes are of interest, and to perform a sensitivity power analysis to evaluate the probability of a Type II error for effects of interest. From the six ways to evaluate which effect sizes are interesting that will be discussed in the second part of this review, it is useful to consider the smallest effect size that can be statistically significant, the expected width of the confidence interval around the effect size, and effects that can be expected in a specific research area, and to evaluate the power for these effect sizes in a sensitivity power analysis.

If a decision or claim is made, a compromise power analysis is worthwhile to consider when deciding upon the error rates while planning the study. When reporting a resource constraints sample size justification it is recommended to address the five considerations in Table 3. Addressing these points explicitly facilitates evaluating if the data is worthwhile to collect.

When designing a study where the goal is to test whether a statistically significant effect is present, researchers often want to make sure their sample size is large enough to prevent erroneous conclusions for a range of effect sizes they care about.

In this approach to justifying a sample size, the value of information is to collect observations up to the point that the probability of an erroneous inference is, in the long run, not larger than a desired value. If a researcher performs a hypothesis test, there are four possible outcomes:

1. A false positive (or Type I error), determined by the α level. A test yields a significant result, even though the null hypothesis is true.
2. A false negative (or Type II error), determined by β, or 1 - power. A test yields a non-significant result, even though the alternative hypothesis is true.
3. A true negative, determined by 1 - α. A test yields a non-significant result when the null hypothesis is true.
4. A true positive, determined by 1 - β. A test yields a significant result when the alternative hypothesis is true.

Given a specified effect size, alpha level, and power, an a-priori power analysis can be used to calculate the number of observations required to achieve the desired error rates, given the effect size. Statistical power can be computed to determine the number of participants, or the number of items (Westfall et al.).
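As a rough illustration of an a-priori power analysis, the sketch below computes the number of observations per group for a two-sided comparison of two independent means. It uses the normal approximation n = 2(z_{1-α/2} + z_{1-β})² / d², which is an assumption made here for simplicity; dedicated power software based on the t-distribution typically returns a slightly larger number. The function name and default values are illustrative.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate observations per group for a two-sided, two-sample test.

    d     : assumed standardized effect size (difference in SD units)
    alpha : Type I error rate
    power : desired power, 1 - beta
    Normal approximation; a t-based calculation needs slightly more.
    """
    z = NormalDist().inv_cdf
    return ceil(2 * (z(1 - alpha / 2) + z(power)) ** 2 / d ** 2)


for effect in (0.8, 0.5, 0.2):
    print(f"d = {effect}: about {n_per_group(effect)} per group")
```

The required sample size scales with 1/d², so halving the effect size a researcher wants to detect roughly quadruples the number of observations needed.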

In general, the lower the error rates (and thus the higher the power), the more informative a study will be, but the more resources are required. Figure 2 visualizes two distributions. The left distribution (dashed line) is centered at 0. This is a model for the null hypothesis.

If the null hypothesis is true, a statistically significant result will be observed if the effect size is extreme enough (in a two-sided test either in the positive or negative direction), but any significant result would be a Type I error (the dark grey areas under the curve).

If there is no true effect, formally statistical power for a null hypothesis significance test is undefined. Any significant effects observed if the null hypothesis is true are Type I errors, or false positives, which occur at the chosen alpha level.

Even though there is a true effect, studies will not always find a statistically significant result. This happens when, due to random variation, the observed effect size is too close to 0 to be statistically significant. Such results are false negatives (the light grey area under the curve on the right).

To increase power, we can collect a larger sample size. As the sample size increases, the distributions become more narrow, reducing the probability of a Type II error. It is important to highlight that the goal of an a-priori power analysis is not to achieve sufficient power for the true effect size.

The true effect size is unknown. The goal of an a-priori power analysis is to achieve sufficient power, given a specific assumption of the effect size a researcher wants to detect. Just like a Type I error rate is the maximum probability of making a Type I error conditional on the assumption that the null hypothesis is true, an a-priori power analysis is computed under the assumption of a specific effect size.

It is unknown if this assumption is correct. All a researcher can do is to make sure their assumptions are well justified.

Statistical inferences based on a test where the Type II error rate is controlled are conditional on the assumption of a specific effect size. They allow the inference that, assuming the true effect size is at least as large as that used in the a-priori power analysis, the maximum Type II error rate in a study is not larger than a desired value.

This point is perhaps best illustrated if we consider a study where an a-priori power analysis is performed both for a test of the presence of an effect and for a test of the absence of an effect. When designing a study, it is essential to consider the possibility that there is no effect.

An a-priori power analysis can be performed both for a null hypothesis significance test and for a test of the absence of a meaningful effect, such as an equivalence test that can statistically provide support for the null hypothesis by rejecting the presence of effects that are large enough to matter (Lakens; Meyners; Rogers et al.).

When multiple primary tests will be performed based on the same sample, each analysis requires a dedicated sample size justification. If possible, a sample size is collected that guarantees that all tests are informative, which means that the collected sample size is based on the largest sample size returned by any of the a-priori power analyses.

Therefore, the researcher should aim to collect participants in total for an informative result for both tests that are planned. This does not guarantee a study has sufficient power for the true effect size which can never be known , but it guarantees the study has sufficient power given an assumption of the effect a researcher is interested in detecting or rejecting.

Therefore, an a-priori power analysis is useful, as long as a researcher can justify the effect sizes they are interested in. If researchers correct the alpha level when testing multiple hypotheses, the a-priori power analysis should be based on this corrected alpha level. An a-priori power analysis can be performed analytically, or by performing computer simulations.

Analytic solutions are faster but less flexible. A common challenge researchers face when attempting to perform power analyses for more complex or uncommon tests is that available software does not offer analytic solutions.

In these cases simulations can provide a flexible solution to perform power analyses for any test (Morris et al.). All simulations consist of first randomly generating data based on assumptions of the data generating mechanism, and then performing the planned statistical test on each simulated dataset.

By computing the percentage of significant results, power can be computed for any design. There is a wide range of tools available to perform power analyses. Whichever tool a researcher decides to use, it will take time to learn how to use the software correctly to perform a meaningful a-priori power analysis.
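A simulation-based power analysis can be sketched with the standard library alone. The example below assumes two independent groups drawn from normal distributions with a known standard deviation of 1 and analyses each simulated dataset with a two-sided z-test; a real analysis would substitute the test that is actually planned. The function name, the number of simulations, and the seed are illustrative assumptions.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean


def simulated_power(d, n_per_group, alpha=0.05, n_sims=2000, seed=1):
    """Estimate power as the share of simulated studies with p < alpha."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    se = sqrt(2 / n_per_group)  # standard error of the mean difference (SD = 1)
    significant = 0
    for _ in range(n_sims):
        # Step 1: generate data under the assumed data generating mechanism.
        control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        treatment = [rng.gauss(d, 1.0) for _ in range(n_per_group)]
        # Step 2: run the test on the simulated dataset.
        z = (fmean(treatment) - fmean(control)) / se
        p_value = 2 * (1 - std_normal.cdf(abs(z)))
        # Step 3: count significant results.
        significant += p_value < alpha
    return significant / n_sims


estimated_power = simulated_power(d=0.5, n_per_group=64)
print(f"Estimated power: {estimated_power:.2f}")
```

Running the same function with `d=0` gives an estimate of the Type I error rate, which is a useful check that the simulation and the test behave as intended.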

Resources to educate psychologists about power analysis consist of book-length treatments (Aberson; Cohen; Julious; Murphy et al.). It is important to be trained in the basics of power analysis, and it can be extremely beneficial to learn how to perform simulation-based power analyses.

At the same time, it is often recommended to enlist the help of an expert, especially when a researcher lacks experience with a power analysis for a specific test.

When reporting an a-priori power analysis, make sure that the power analysis is completely reproducible. If power analyses are performed in R it is possible to share the analysis script and information about the version of the package.

In many software packages it is possible to export the power analysis that is performed as a PDF file. If the software package provides no way to export the analysis, add a screenshot of the power analysis to the supplementary files.

The reproducible report needs to be accompanied by justifications for the choices that were made with respect to the values used in the power analysis. If the effect size used in the power analysis is based on previous research, the factors presented in Table 5 (if the effect size is based on a meta-analysis) or Table 6 (if the effect size is based on a single study) should be discussed.

If an effect size estimate is based on the existing literature, provide a full citation, and preferably a direct quote from the article where the effect size estimate is reported.

If the effect size is based on a smallest effect size of interest, this value should not just be stated, but justified.

For an overview of all aspects that should be reported when describing an a-priori power analysis, see Table 4. The goal when justifying a sample size based on precision is to collect data to achieve a desired width of the confidence interval around a parameter estimate.

The width of the confidence interval around the parameter estimate depends on the standard deviation and the number of observations.

The only aspect a researcher needs to justify for a sample size justification based on accuracy is the desired width of the confidence interval with respect to their inferential goal, and their assumption about the population standard deviation of the measure. If a researcher has determined the desired accuracy, and has a good estimate of the true standard deviation of the measure, it is straightforward to calculate the sample size needed for a desired level of accuracy.

The required sample size to achieve this desired level of accuracy (assuming normally distributed data) can be computed by:

$$N = \left(\frac{z \cdot sd}{error}\right)^2$$

where N is the number of observations, z is the critical value related to the desired confidence interval, sd is the standard deviation of IQ scores in the population, and error is the width of the confidence interval within which the mean should fall, with the desired error rate.
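The calculation can be sketched in code, assuming the relation N = (z · sd / error)² implied by the symbol definitions above. The input values (a standard deviation of 15 and an error of 2 or 1 points, at 95% confidence) are assumptions chosen for illustration and are not taken from the source.

```python
from math import ceil
from statistics import NormalDist


def n_for_precision(sd, error, confidence=0.95):
    """Observations needed so the mean falls within +/- `error`.

    Assumes normally distributed data and a known population SD.
    """
    # Two-sided critical value, e.g. about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return ceil((z * sd / error) ** 2)


print(n_for_precision(sd=15, error=2))  # assumed inputs
print(n_for_precision(sd=15, error=1))  # half the error
```

Because the error term is squared in the denominator, halving the desired error roughly quadruples the required number of observations.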

In this example, 1. If a desired accuracy for a non-zero mean difference is computed, accuracy is based on a non-central t-distribution. For these calculations an expected effect size estimate needs to be provided, but it has relatively little influence on the required sample size (Maxwell et al.).

The MBESS package in R provides functions to compute sample sizes for a wide range of tests (Kelley). What is less straightforward is to justify how a desired level of accuracy is related to inferential goals.

There is no literature that helps researchers to choose a desired width of the confidence interval. Morey convincingly argues that most practical use-cases of planning for precision involve an inferential goal of distinguishing an observed effect from other effect sizes (for a Bayesian perspective, see Kruschke). If the goal is indeed to get an effect size estimate that is precise enough so that two effects can be differentiated with high probability, the inferential goal is actually a hypothesis test, which requires designing a study with sufficient power to reject effects.

If researchers do not want to test a hypothesis, for example because they prefer an estimation approach over a testing approach, then in the absence of clear guidelines that help researchers to justify a desired level of precision, one solution might be to rely on a generally accepted norm of precision to aim for.

This norm could be based on ideas about a certain resolution below which measurements in a research area no longer lead to noticeably different inferences.

Just as researchers normatively use an alpha level of 0. Future work is needed to help researchers choose a confidence interval width when planning for accuracy. When a researcher uses a heuristic, they are not able to justify their sample size themselves, but they trust in a sample size recommended by some authority.

When I started as a PhD student, it was common to collect 15 participants in each between-subject condition.

When asked why this was a common practice, no one was really sure, but people trusted there was a justification somewhere in the literature. Now, I realize there was no justification for the heuristics we used. Some papers provide researchers with simple rules of thumb about the sample size that should be collected.

Such papers clearly fill a need, and are cited a lot, even when the advice in these articles is flawed. Green into the recommendation to collect ~50 observations. We see how a string of mis-citations eventually leads to a misleading rule of thumb.

Regrettably, this advice is now often mis-cited as a justification to collect no more than 50 observations per condition without considering the expected effect size.

If authors justify a specific sample size e. Another common heuristic is to collect the same number of observations as were collected in a previous study.

Using the same sample size as a previous study is only a valid approach if the sample size justification in the previous study also applies to the current study. Instead of stating that you intend to collect the same sample size as an earlier study, repeat the sample size justification, and update it in light of any new information such as the effect size in the earlier study, see Table 6.

Peer reviewers and editors should carefully scrutinize rules of thumb sample size justifications, because they can make it seem like a study has high informational value for an inferential goal even when the study will yield uninformative results.

In most cases, heuristics are based on weak logic, and not widely applicable. It might be possible that fields develop valid heuristics for sample size justifications.

Alternatively, it is possible that a field agrees that data should be collected with a desired level of accuracy, irrespective of the true effect size. In these cases, valid heuristics would exist based on generally agreed goals of data collection. For example, Simonsohn suggests to design replication studies that have 2.

It is the responsibility of researchers to gain the knowledge to distinguish valid heuristics from mindless heuristics, and to be able to evaluate whether a heuristic will yield an informative result given the inferential goal of the researchers in a specific study, or not.

It might sound like a contradictio in terminis, but it is useful to distinguish a final category where researchers explicitly state they do not have a justification for their sample size.

Perhaps the resources were available to collect more data, but they were not used. A researcher could have performed a power analysis, or planned for precision, but they did not.

In those cases, instead of pretending there was a justification for the sample size, honesty requires you to state there is no sample size justification. This is not necessarily bad.

It is still possible to discuss the smallest effect size of interest, the minimal statistically detectable effect, the width of the confidence interval around the effect size, and to plot a sensitivity power analysis, in relation to the sample size that was collected.

If a researcher truly had no specific inferential goals when collecting the data, such an evaluation can perhaps be performed based on reasonable inferential goals peers would have when they learn about the existence of the collected data.

Do not try to spin a story where it looks like a study was highly informative when it was not. Instead, transparently evaluate how informative the study was given effect sizes that were of interest, and make sure that the conclusions follow from the data.

The lack of a sample size justification might not be problematic, but it might mean that a study was not informative for most effect sizes of interest, which makes it especially difficult to interpret non-significant effects, or estimates with large uncertainty. The inferential goal of data collection is often in some way related to the size of an effect.

Therefore, to design an informative study, researchers will want to think about which effect sizes are interesting. First, it is useful to consider three effect sizes when determining the sample size. The first is the smallest effect size a researcher is interested in, the second is the smallest effect size that can be statistically significant (only in studies where a significance test will be performed), and the third is the effect size that is expected.

Beyond considering these three effect sizes, it can be useful to evaluate ranges of effect sizes. This can be done by computing the width of the expected confidence interval around an effect size of interest (for example, an effect size of zero) and examining which effects could be rejected.

Similarly, it can be useful to plot a sensitivity curve and evaluate the range of effect sizes the design has decent power to detect, as well as to consider the range of effects for which the design has low power.
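A sensitivity curve of this kind can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used in the text: it assumes a two-sided test comparing two independent groups of equal size, and it replaces the exact noncentral t computation with a normal approximation, so power is slightly overstated for small samples. The function names `approx_power` and `sensitivity_curve` are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(d, n_per_group, alpha=0.05):
    """Approximate two-sided power for a standardized mean difference d.

    Assumption: normal approximation to the test statistic, two independent
    groups of equal size n_per_group.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    # Expected value of the test statistic under the alternative.
    ncp = d * sqrt(n_per_group / 2)
    # Probability of landing in either rejection region.
    return z.cdf(ncp - z_crit) + z.cdf(-ncp - z_crit)


def sensitivity_curve(n_per_group, effect_sizes, alpha=0.05):
    """Return (effect size, approximate power) pairs for a fixed design."""
    return [(d, approx_power(d, n_per_group, alpha)) for d in effect_sizes]


if __name__ == "__main__":
    grid = [0.1 * k for k in range(11)]  # d = 0.0, 0.1, ..., 1.0
    for d, power in sensitivity_curve(50, grid):
        print(f"d = {d:.1f}  approximate power = {power:.2f}")
```

Reading down the printed table shows where power becomes decent for the chosen sample size, and for which range of smaller effects the design has low power.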

Finally, there are situations where it is useful to consider a range of effects that are likely to be observed in a specific research area. The strongest possible sample size justification is based on an explicit statement of the smallest effect size that is considered interesting. A smallest effect size of interest can be based on theoretical predictions or practical considerations.

For a review of approaches that can be used to determine a smallest effect size of interest in randomized controlled trials, see Cook et al. It can be challenging to determine the smallest effect size of interest whenever theories are not very developed, or when the research question is far removed from practical applications, but it is still worth thinking about which effects would be too small to matter.

A first step forward is to discuss which effect sizes are considered meaningful in a specific research line with your peers. Researchers will differ in the effect sizes they consider large enough to be worthwhile (Murphy et al.).

Even though it might be challenging, there are important benefits of being able to specify a smallest effect size of interest. The population effect size is always uncertain (indeed, estimating this is typically one of the goals of the study), and therefore whenever a study is powered for an expected effect size, there is considerable uncertainty about whether the statistical power is high enough to detect the true effect in the population.

However, if the smallest effect size of interest can be specified and agreed upon after careful deliberation, it becomes possible to design a study that has sufficient power given the inferential goal to detect or reject the smallest effect size of interest with a certain error rate.

The minimal statistically detectable effect, or the critical effect size, provides information about the smallest effect size that, if observed, would be statistically significant given a specified alpha level and sample size (Cook et al.). For any critical t value e.

For a two-sided independent t test the critical mean difference is: $M_{crit} = t_{crit} \sqrt{\frac{sd_1^2}{n_1} + \frac{sd_2^2}{n_2}}$. This figure is similar to Figure 2, with the addition that the critical d is indicated. Whether such effect sizes are interesting, and can realistically be expected, should be carefully considered and justified. This reveals that when the sample size is relatively small, the observed effect needs to be quite substantial to be statistically significant.
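To make the critical effect size concrete, the sketch below computes an approximate critical standardized mean difference for a two-sided test with two independent groups. It is an illustration under stated assumptions: the critical t value is replaced by the critical z value (a large-sample approximation), so the result is somewhat too small for small samples, where the t-based critical value is larger. The function name `critical_d` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def critical_d(n1, n2, alpha=0.05):
    """Approximate smallest standardized mean difference that reaches significance.

    Assumption: the critical t value is approximated by the critical z value,
    which is only accurate for reasonably large samples.
    """
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    # Standard error of d when the standardized difference is on a unit-SD scale.
    return z_crit * sqrt(1 / n1 + 1 / n2)


if __name__ == "__main__":
    for n in (15, 50, 200):
        print(f"n = {n:>3} per group  approximate critical d = {critical_d(n, n):.3f}")
```

The output illustrates the point made above: with few observations per group, only quite substantial observed effects can be statistically significant.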

It is important to realize that due to random variation each study has a probability to yield effects larger than the critical effect size, even if the true effect size is small or even when the true effect size is 0, in which case each significant effect is a Type I error.

Computing a minimal statistically detectable effect is useful for a study where no a-priori power analysis is performed, both for studies in the published literature that do not report a sample size justification (Lakens, Scheel, et al.

It can be informative to ask yourself whether the critical effect size for a study design is within the range of effect sizes that can realistically be expected. If not, then whenever a significant effect is observed in a published study, either the effect size is surprisingly larger than expected, or more likely, it is an upwardly biased effect size estimate.

In the latter case, given publication bias, published studies will lead to biased effect size estimates.

If it is still possible to increase the sample size, for example by ignoring rules of thumb and instead performing an a-priori power analysis, then do so. If it is not possible to increase the sample size, for example due to resource constraints, then reflecting on the minimal statistically detectable effect should make it clear that an analysis of the data should not focus on p values, but on the effect size and the confidence interval (see Table 3).

Taking into account the minimal statistically detectable effect size should make you reflect on whether a hypothesis test will yield an informative answer, and whether your current approach to sample size justification e.

Although the true population effect size is always unknown, there are situations where researchers have a reasonable expectation of the effect size in a study, and want to use this expected effect size in an a-priori power analysis.

Even if expectations for the observed effect size are largely a guess, it is always useful to explicitly consider which effect sizes are expected. A researcher can justify a sample size based on the effect size they expect, even if such a study would not be very informative with respect to the smallest effect size of interest.

In such cases a study is informative for one inferential goal (testing whether the expected effect size is present or absent), but not highly informative for the second goal (testing whether the smallest effect size of interest is present or absent).

There are typically three sources for expectations about the population effect size: a meta-analysis, a previous study, or a theoretical model. It is tempting for researchers to be overly optimistic about the expected effect size in an a-priori power analysis, as higher effect size estimates yield lower sample sizes, but being too optimistic increases the probability of observing a false negative result.
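The cost of optimism can be illustrated with a rough a-priori power calculation. The sketch below is a simplified illustration, not the method used in the text: it assumes a two-sided test with two independent, equally sized groups and uses the common normal-approximation formula n = 2((z_{1-α/2} + z_{power}) / d)², which slightly underestimates the sample size an exact t-based power analysis would give. The function name `n_per_group` and the example effect sizes are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(d, power=0.80, alpha=0.05):
    """Approximate observations needed per group to detect effect size d.

    Assumption: normal approximation, two-sided test, equal group sizes.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return ceil(2 * ((z_alpha + z_power) / d) ** 2)


if __name__ == "__main__":
    optimistic, realistic = 0.5, 0.3  # hypothetical expected effect sizes
    print("planned n per group for d = 0.5:", n_per_group(optimistic))
    print("needed n per group if d = 0.3: ", n_per_group(realistic))
```

If the study is planned for the optimistic estimate but the true effect is the smaller one, the collected sample falls well short of what is needed, which is why an overly optimistic expectation raises the probability of a false negative.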

When reviewing a sample size justification based on an a-priori power analysis, it is important to critically evaluate the justification for the expected effect size used in power analyses. In a perfect world effect size estimates from a meta-analysis would provide researchers with the most accurate information about which effect size they could expect.

Due to widespread publication bias in science, effect size estimates from meta-analyses are regrettably not always accurate. They can be biased, sometimes substantially so. Furthermore, meta-analyses typically have considerable heterogeneity, which means that the meta-analytic effect size estimate differs for subsets of studies that make up the meta-analysis.

So, although it might seem useful to use a meta-analytic effect size estimate of the effect you are studying in your power analysis, you need to take great care before doing so. If a researcher wants to enter a meta-analytic effect size estimate in an a-priori power analysis, they need to consider three things (see Table 5).

First, the studies included in the meta-analysis should be similar enough to the study they are performing that it is reasonable to expect a similar effect size.

In essence, this requires evaluating the generalizability of the effect size estimate to the new study. It is important to carefully consider differences between the meta-analyzed studies and the planned study, with respect to the manipulation, the measure, the population, and any other relevant variables.

Second, researchers should check whether the effect sizes reported in the meta-analysis are homogeneous. If not, and there is considerable heterogeneity in the meta-analysis, it means not all included studies can be expected to have the same true effect size estimate.

A meta-analytic estimate should be used based on the subset of studies that most closely represent the planned study. Third, the meta-analytic effect size estimate should not be biased. Check if the bias detection tests that are reported in the meta-analysis are state-of-the-art, or perform multiple bias detection tests yourself (Carter et al.).

If a meta-analysis is not available, researchers often rely on an effect size from a previous study in an a-priori power analysis. The first issue that requires careful attention is whether the two studies are sufficiently similar. Just as when using an effect size estimate from a meta-analysis, researchers should consider if there are differences between the studies in terms of the population, the design, the manipulations, the measures, or other factors that should lead one to expect a different effect size.

For example, intra-individual reaction time variability increases with age, and therefore a study performed on older participants should expect a smaller standardized effect size than a study performed on younger participants. If an earlier study used a very strong manipulation, and you plan to use a more subtle manipulation, a smaller effect size should be expected.

Finally, effect sizes do not generalize to studies with different designs. Even if a study is sufficiently similar, statisticians have warned against using effect size estimates from small pilot studies in power analyses. Leon, Davis, and Kraemer write:

Contrary to tradition, a pilot study does not provide a meaningful effect size estimate for planning subsequent studies due to the imprecision inherent in data from small samples.

The two main reasons researchers should be careful when using effect sizes from studies in the published literature in power analyses are that effect size estimates from studies can differ from the true population effect size due to random variation, and that publication bias inflates effect sizes.

But even if we had access to all effect sizes e. For example, imagine there is extreme publication bias in the situation illustrated in Figure 6. It is possible to compute an effect size estimate that, based on certain assumptions, corrects for bias. However, if we assume bias is present, we can use the BUCSS R package (S. Anderson et al.). A power analysis that takes bias into account (under a specific model of publication bias, based on a truncated F distribution where only significant results are published) suggests collecting 73 participants in each condition.

It is possible that the bias corrected estimate of the non-centrality parameter used to compute power is zero, in which case it is not possible to correct for bias using this method.

Both these approaches lead to a more conservative power analysis, but not necessarily a more accurate power analysis. If it is not possible to specify a smallest effect size of interest, and there is great uncertainty about which effect size to expect, it might be more efficient to perform a study with a sequential design (discussed below).

To summarize, an effect size from a previous study in an a-priori power analysis can be used if three conditions are met (see Table 6). First, the previous study is sufficiently similar to the planned study. Second, there was a low risk of bias e.

When your theoretical model is sufficiently specific such that you can build a computational model, and you have knowledge about key parameters in your model that are relevant for the data you plan to collect, it is possible to estimate an effect size based on the effect size estimate derived from a computational model.

Although computational models that make point predictions are relatively rare, whenever they are available, they provide a strong justification of the effect size a researcher expects. Confidence intervals represent a range around an estimate that is wide enough so that in the long run the true population parameter will fall inside the confidence intervals 100(1 − α) percent of the time.

A Bayesian estimator who uses an uninformative prior would compute a credible interval with the same or a very similar upper and lower bound (Albers et al.). Regardless of the statistical philosophy you plan to rely on when analyzing the data, the evaluation of what we can conclude based on the width of our interval tells us that with 15 observations per group we will not learn a lot.

One useful way of interpreting the width of the confidence interval is based on the effects you would be able to reject if the true effect size is 0. In other words, if there is no effect, which effects would you have been able to reject given the collected data, and which effect sizes would not be rejected, if there was no effect?

The width of the confidence interval tells you that you can only reject the presence of effects that are so large that, if they existed, you would probably already have noticed them. Even without data, in most research lines we would not consider certain large effects plausible (although the effect sizes that are plausible differ between fields, as discussed below).
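The reasoning about interval width can be sketched numerically. The example below is a rough illustration under stated assumptions, not the computation used in the text: it approximates the confidence interval around a standardized mean difference of zero for two independent, equally sized groups, using a normal approximation with standard error sqrt(2 / n). The function name `ci_for_zero_effect` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def ci_for_zero_effect(n_per_group, conf_level=0.95):
    """Approximate confidence interval around an observed d of exactly 0.

    Assumption: normal approximation, with the standard error of d taken
    as sqrt(2 / n) for equal group sizes when d = 0.
    """
    z = NormalDist().inv_cdf(1 - (1 - conf_level) / 2)
    half_width = z * sqrt(2 / n_per_group)
    return (-half_width, half_width)


if __name__ == "__main__":
    for n in (15, 50, 200):
        lower, upper = ci_for_zero_effect(n)
        print(f"n = {n:>3} per group  approximate 95% CI: [{lower:.2f}, {upper:.2f}]")
```

Effects outside the printed interval are the ones the design could reject if the true effect were zero. With 15 observations per group that leaves only very large effects, which is why so little is learned from such a study.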